Quiztinar is a software tool developed as part of the research for my Master of Science thesis: Leveraging GPT-Based Generative Models for Automated Quiz Generation (Grade 1,0). It helps teachers generate quizzes with the latest available GenAI model simply by uploading their syllabus slides. I researched, designed, and developed the entire project.

The software is free to use under the MIT license and is available in the following repository: https://github.com/ax-mrf/quiztinar (requires an OpenAI API key, available at https://platform.openai.com)

Abstract of the Thesis:

Recent advancements in Natural Language Processing (NLP), specifically Large Language Models (LLMs) with generative capabilities such as Generative Pre-trained Transformer (GPT) models, have opened up many possibilities for Automated Quiz Generation.

In this research project, I explore the use of GPT assistants to support course instructors in an educational setting by creating relevant quizzes that align with the educational goals and objectives of the course. Because the pre-trained models were developed from an exceptionally wide array of topics, concepts, and subjects, GPT-based systems have the potential to save teachers time in almost any academic field.

However, while large language models excel at light, general tasks, the quality of their output diminishes when they are expected to perform specific tasks that require reasoning.

This research project aims to address this issue by designing an artifact that pushes the limits of GPT-4o to generate a large number of unique multiple-choice questions that are comparable to human-made questions in terms of relevance to learning objectives and adherence to accepted multiple-choice item-writing guidelines.

The output of LLMs can be improved through prompting or fine-tuning. Since prompting requires far fewer resources, my design builds a prompting pipeline that effectively produces multiple-choice questions from minimal input by the course instructor.

The resulting software artifact, Quiztinar, is a user-friendly application that communicates with OpenAI through its API. It produces output better aligned with its intended use through a two-step Chain-of-Thought method that forces the model to simulate a reasoning step before generating the final output.
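The two-step method can be sketched in Python as below. This is a minimal illustration under stated assumptions, not Quiztinar's actual code: the prompt wording, the `generate_quiz` and `QuizResult` names, and the `complete` callable are all hypothetical. It also assumes, as the conclusion describes, that the first step extracts learning objectives and the second generates questions from them. `complete` stands in for any chat-completion call that takes a list of role/content messages and returns text; with the official OpenAI Python SDK it could, for example, wrap `client.chat.completions.create(model="gpt-4o", messages=messages)` and return `response.choices[0].message.content`. The optional `review` hook mirrors the review-and-edit step shown in Figure 3.

```python
from collections.abc import Callable
from dataclasses import dataclass
from typing import Optional

# A chat message in the role/content shape used by chat-completion APIs.
Message = dict[str, str]
# Hypothetical model interface: messages in, generated text out.
Complete = Callable[[list[Message]], str]

# Hypothetical prompt wording, for illustration only.
OBJECTIVES_PROMPT = (
    "You are an experienced course instructor. Read the slide text below and "
    "list the learning objectives it supports, one per line."
)
QUESTIONS_PROMPT = (
    "Using the learning objectives below, write {n} multiple-choice questions "
    "that follow accepted item-writing guidelines. Each question must target "
    "one objective and have exactly one correct answer."
)


@dataclass
class QuizResult:
    objectives: str  # output of step 1 (possibly edited by the instructor)
    questions: str   # output of step 2


def generate_quiz(
    slide_text: str,
    complete: Complete,
    n_questions: int = 5,
    review: Optional[Callable[[str], str]] = None,
) -> QuizResult:
    # Step 1: make the model "reason" about the material first by
    # extracting learning objectives from the slides.
    objectives = complete([
        {"role": "system", "content": OBJECTIVES_PROMPT},
        {"role": "user", "content": slide_text},
    ])
    # Optional human-in-the-loop: the instructor may review and edit step 1.
    if review is not None:
        objectives = review(objectives)
    # Step 2: generate questions conditioned on the (reviewed) objectives.
    questions = complete([
        {"role": "system", "content": QUESTIONS_PROMPT.format(n=n_questions)},
        {"role": "user", "content": objectives},
    ])
    return QuizResult(objectives=objectives, questions=questions)


if __name__ == "__main__":
    # Stand-in model so the sketch runs offline (no API call is made).
    def fake_complete(messages: list[Message]) -> str:
        if messages[0]["content"] == OBJECTIVES_PROMPT:
            return "Explain how self-attention weighs input tokens."
        return "Q1. What does a self-attention layer compute? ..."

    result = generate_quiz("Slide 3: Self-attention ...", fake_complete, n_questions=1)
    print(result.objectives)
    print(result.questions)
```

Keeping the model behind a plain callable makes the two prompting steps easy to test without network access and lets the same pipeline target a different model later.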

Results indicate that generating quizzes with the designed pipeline is highly effective and saves time. However, the pipeline still leaves room for improvement through further development.

Ask for the Full Text by e-mailing: maarouf.alx@gmail.com

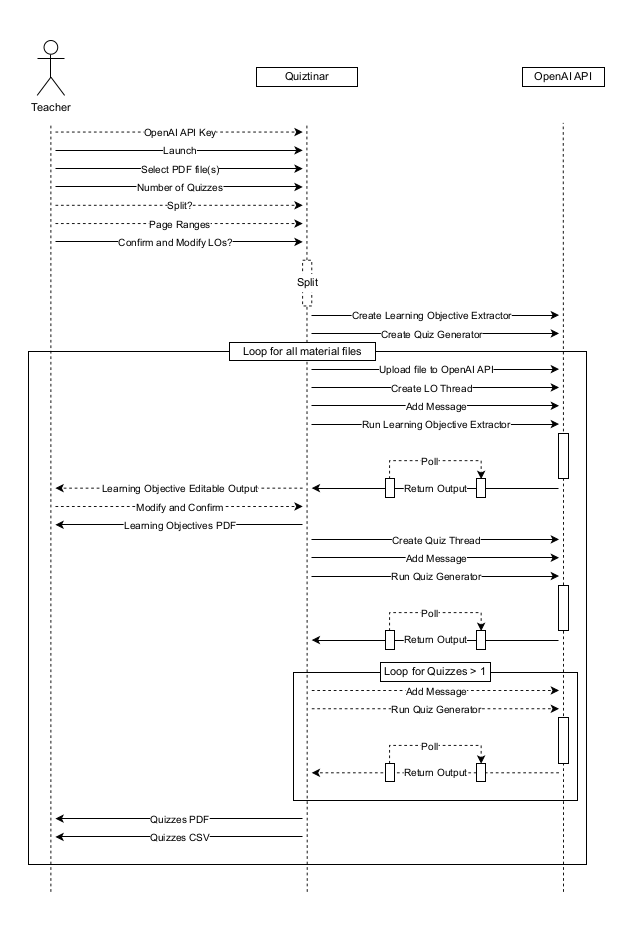

Figure 1 shows the design of Quiztinar.

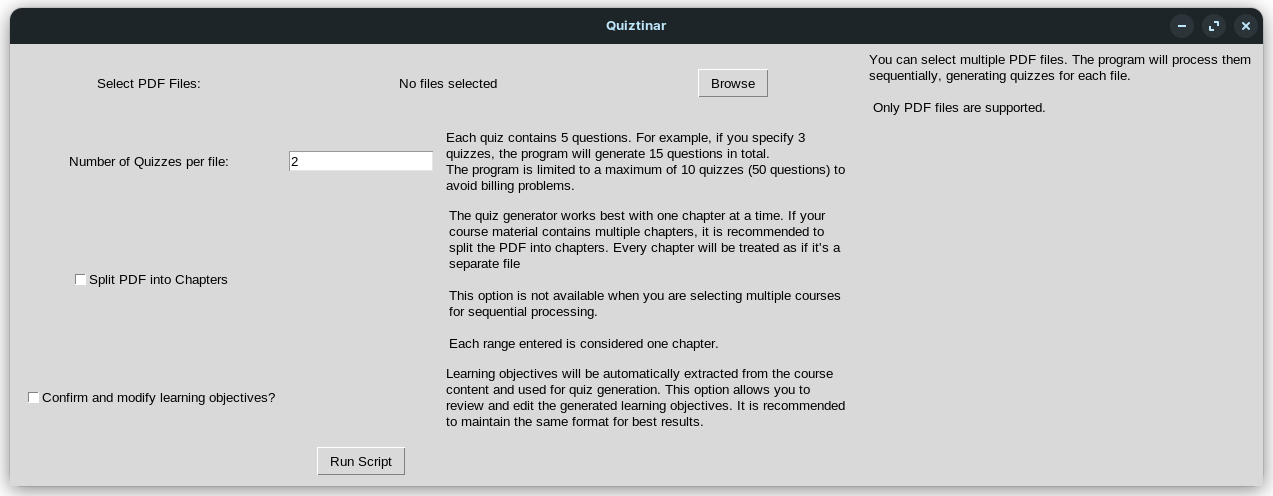

Figure 2 shows the GUI for Quiztinar.

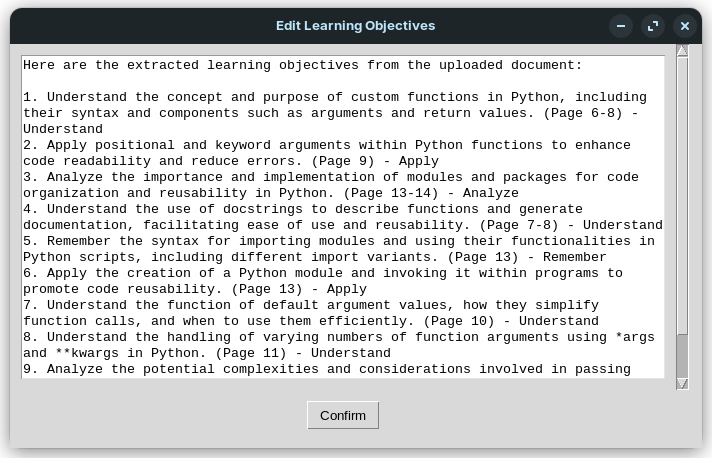

Figure 3 shows an example of the user reviewing and editing the output of the first of the two Chain-of-Thought steps, which simulates reasoning during quiz generation.

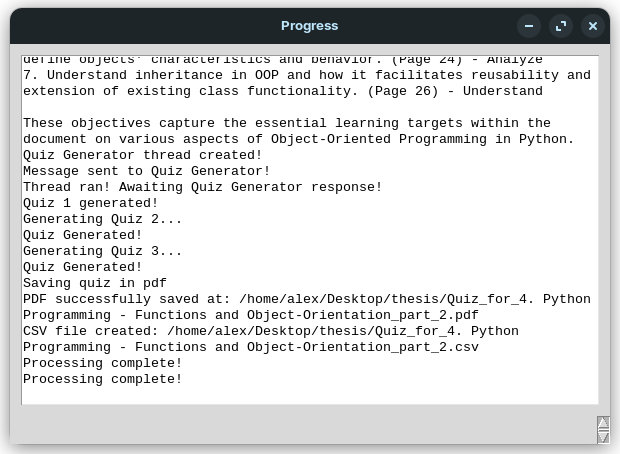

Figure 4 shows the progress window of Quiztinar in action.

Conclusion of the research:

This research explores improvements in automated quiz generation by structuring the process into two key steps:

- Extracting learning objectives.

- Generating quiz questions based on the extracted objectives.

The findings suggest that this method significantly improves quiz quality, reducing flaws in generated items while enhancing relevance to course material. The results also indicate an improvement in the quality of the generated content, as the generated quizzes require students to engage with higher levels of Bloom’s Taxonomy cognitive skills.
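The findings refer to flaws in generated items. Some of the more mechanical item-writing rules lend themselves to automatic checks; the Python sketch below is a hypothetical illustration, not the evaluation rubric used in the thesis. The `MCQItem` structure, the `find_item_flaws` function, and the particular rules (exactly one correct answer, no duplicate options, no "all/none of the above", no negatively worded stem) are assumptions, chosen because they appear in common multiple-choice item-writing guidelines.

```python
import re
from dataclasses import dataclass


@dataclass
class MCQItem:
    # Hypothetical representation of one generated multiple-choice item.
    stem: str
    options: list[str]
    correct: list[int]  # indices into `options` marked as correct


# Option texts that common item-writing guidelines advise against.
DISCOURAGED_OPTIONS = {"all of the above", "none of the above"}


def find_item_flaws(item: MCQItem) -> list[str]:
    """Return human-readable descriptions of rule violations (empty if none)."""
    flaws: list[str] = []
    if len(item.correct) != 1:
        flaws.append("item must have exactly one correct answer")
    if any(i < 0 or i >= len(item.options) for i in item.correct):
        flaws.append("correct-answer index out of range")
    # Compare options case-insensitively so near-duplicates are caught.
    normalized = [o.strip().lower() for o in item.options]
    if len(set(normalized)) != len(normalized):
        flaws.append("duplicate options")
    if any(o.rstrip(".") in DISCOURAGED_OPTIONS for o in normalized):
        flaws.append("uses 'all/none of the above'")
    if not item.stem.strip():
        flaws.append("empty stem")
    # Negatively worded stems (e.g. "Which is NOT ...") are commonly discouraged;
    # the word boundary avoids matching words such as "cannot".
    if re.search(r"\bnot\b", item.stem, re.IGNORECASE):
        flaws.append("negatively worded stem")
    return flaws
```

Checks like these only cover surface-level rules; judging relevance to learning objectives or the Bloom’s level of a question still requires human or model-based review.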

Whereas previous studies primarily reached the Remember and Understand levels of the cognitive process dimension, this approach enables testing of higher-order cognitive skills without requiring fine-tuning or extensive manual input.

This research contributes to the ongoing development of AI-assisted educational tools and provides a foundation for future work in automated assessment generation.
